In this tutorial we will see how to easily create an event-driven application with Spring Cloud Stream and Kafka.

The application we will create will simulate sending and receiving events from a temperature sensor.

We will then see how to write producers and consumers, including reactive ones, with Spring Cloud Stream.

A bit of theory

What is Spring Cloud Stream

Spring Cloud Stream is a Spring module that combines Spring Integration (which implements the enterprise integration patterns) with Spring Boot.

The goal of this module is to let developers focus solely on the business logic of event-driven applications, without worrying about the code needed to handle different messaging systems.

In fact, with Spring Cloud Stream you can write code that produces/consumes messages on Kafka, and the same code would also work with RabbitMQ, Amazon Kinesis, Amazon SQS, Azure Event Hubs, and so on!

Components of Spring Cloud Stream

Spring Cloud Stream has three main components:

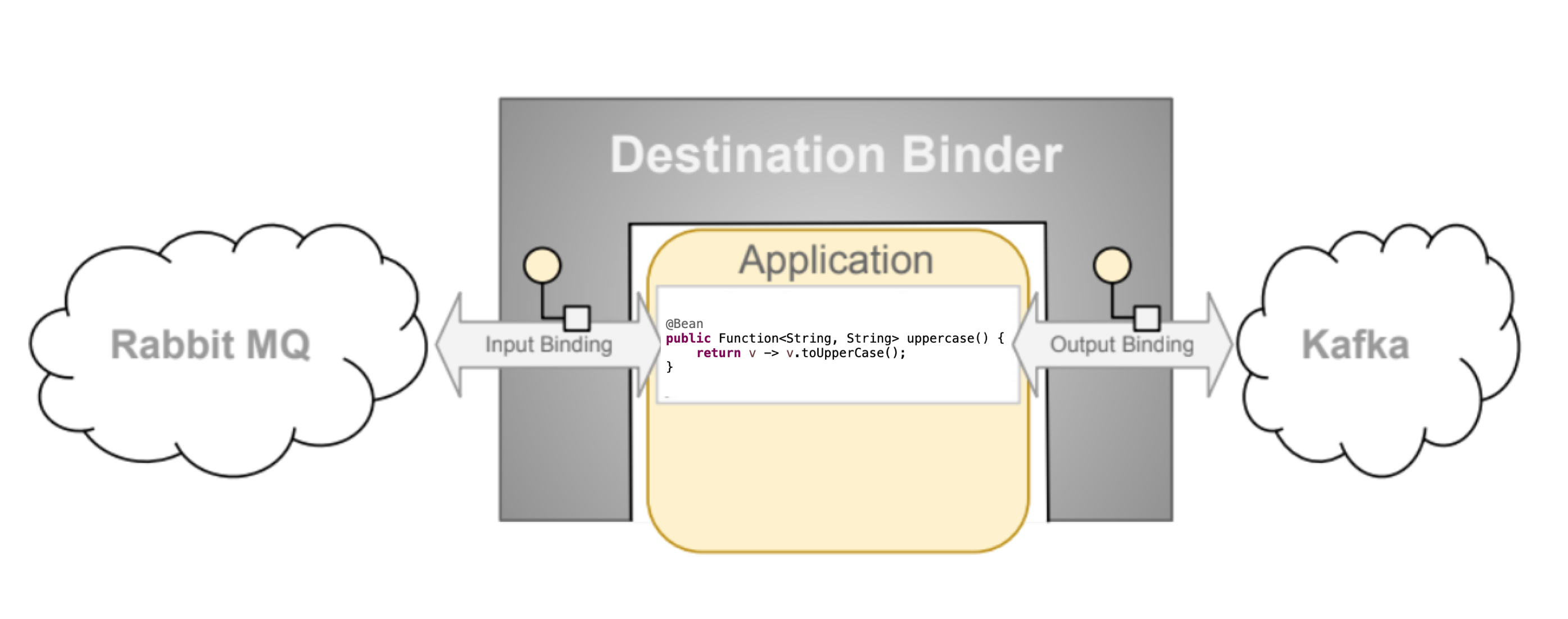

- Destination Binder: The component that provides integration with external message systems such as RabbitMQ or Kafka.

- Binding: A bridge between the external message system and the producers/consumers provided by the application.

- Message: The data structure used by producers/consumers to communicate with Destination Binders.

Image source: https://docs.spring.io/spring-cloud-stream/docs/current/reference/html/spring-cloud-stream.html#spring-cloud-stream-reference

The image above shows that bindings can be input or output bindings. You can also combine multiple binders: for example, a simple Java function could read a message from RabbitMQ and write it to Kafka.

Developers can focus solely on the business logic, written as functions; once they have chosen the messaging system, they only need to import the dependency of the corresponding binder.

Some binders are maintained directly by the Spring Cloud Stream team, such as Apache Kafka, RabbitMQ and Amazon Kinesis, while others are maintained by external maintainers, such as Azure Event Hubs and Amazon SQS. Spring Cloud Stream also lets you create custom binders.

Spring Cloud Stream also provides Partitions and Message Groups, mirroring the corresponding Apache Kafka features.

This means that the framework makes these two features available for any messaging system you choose (so, for example, you get them even with RabbitMQ, which does not natively support them).

Spring Cloud Stream and Spring Cloud Function

Spring Cloud Stream is based on Spring Cloud Function, so business logic can be written as simple functions.

It uses the three classic Java functional interfaces:

- Supplier: a function that has an output but no input; it is also called producer, publisher or source.

- Consumer: a function that has an input but no output; it is also called subscriber or sink.

- Function: a function that has both an input and an output; it is also called processor.

This is precisely why I am writing about Spring Cloud Stream today: earlier versions did not use this functional approach, which made the framework less intuitive.
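To make the three roles concrete, here is a plain-Java sketch with no Spring involved: a Supplier, a Function and a Consumer wired together by hand. The class name FunctionalRoles and the runOnce helper are illustrative only; in a real Spring Cloud Stream application it is the binder, not our code, that connects these functions to destinations.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Supplier;

// Plain-Java sketch (no Spring): the three functional roles wired together by hand.
public class FunctionalRoles {

    // Supplier = source/producer: output, no input
    public static Supplier<String> source() {
        return () -> "hello";
    }

    // Function = processor: input and output
    public static Function<String, String> uppercase() {
        return String::toUpperCase;
    }

    // Consumer = sink: input, no output (here it simply stores what it receives)
    public static Consumer<String> sink(List<String> received) {
        return received::add;
    }

    // Pulls one value from the source, transforms it and hands it to the sink:
    // the plumbing that the binder performs for us in Spring Cloud Stream
    public static List<String> runOnce() {
        List<String> received = new ArrayList<>();
        sink(received).accept(uppercase().apply(source().get()));
        return received;
    }

    public static void main(String[] args) {
        System.out.println(runOnce()); // prints [HELLO]
    }
}
```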

Here is an example of a processor that takes a string as input and converts it to upper case, written with the previous, annotation-based version of the module:

```java
@StreamListener(Processor.INPUT)
@SendTo(Processor.OUTPUT)
public String handle(String value) {
    return value.toUpperCase();
}
```

The same example, with the current version:

```java
@Bean
public Function<String, String> uppercase() {
    // The bean method takes no arguments: it returns the function itself
    return v -> v.toUpperCase();
}
```

Now for some practice!

Step one: we create the project from Spring Initializr

We create the project skeleton on the Spring Initializr site (the link already contains the necessary configuration).

Download the project, unzip it and open it. Let's analyze the pom.xml:

```xml
<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-stream</artifactId>
</dependency>
<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-stream-binder-kafka</artifactId>
</dependency>
```

The first dependency is the core Spring Cloud Stream dependency, while the second provides the binder for the chosen messaging system, Kafka in our case. To switch binder, we would only change this second dependency.

Step two: create a model for the message

Create a model sub-package containing the following record:

```java
import java.time.Instant;

public record SensorEventMessage(
        String sensorId,
        Instant timestampEvent,
        Double degrees
) {}
```

This model represents a message sent by a temperature sensor.
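Records give us equals, hashCode and toString for free, and the rest of the tutorial relies on them: the log lines print the record's toString, and the tests compare messages with isEqualTo. A minimal plain-Java check, assuming Java 16 or later; the wrapper class SensorEventMessageDemo exists only to make the snippet runnable.

```java
import java.time.Instant;

// Assumes Java 16+ (records). The wrapper class is illustrative only.
public class SensorEventMessageDemo {

    // Same components as the tutorial's record
    public record SensorEventMessage(String sensorId, Instant timestampEvent, Double degrees) {}

    public static void main(String[] args) {
        Instant ts = Instant.parse("2023-02-27T19:40:08Z");
        SensorEventMessage first = new SensorEventMessage("2", ts, 30.0);
        SensorEventMessage second = new SensorEventMessage("2", ts, 30.0);

        // equals compares component by component, so two separate instances are equal
        System.out.println(first.equals(second)); // prints true
        // toString lists every component: this is what appears in the "Message received" log
        System.out.println(first); // prints SensorEventMessage[sensorId=2, timestampEvent=2023-02-27T19:40:08Z, degrees=30.0]
        // accessors are named after the components, without a "get" prefix
        System.out.println(first.degrees()); // prints 30.0
    }
}
```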

Step three: create a consumer

Create an event sub-package containing the following class:

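```java
import java.util.function.Consumer;

import lombok.RequiredArgsConstructor;
import lombok.extern.slf4j.Slf4j;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
// SensorEventMessage is imported from the model sub-package

@Configuration
@Slf4j
@RequiredArgsConstructor
public class SensorEventFunctions {

    @Bean
    public Consumer<SensorEventMessage> logEventReceived() {
        return sensorEventMessage -> log.info("Message received: {}", sensorEventMessage);
    }
}
```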

Let's analyze the code:

- The class is annotated with @Configuration so that it can declare beans (methods annotated with @Bean).

- The method, which is the actual consumer, is a simple Java function of type Consumer that logs the received message. It is also annotated with @Bean: in Spring Cloud Function, functions only need to be beans to be activated.

Did you find references to Kafka? 🤭

Step four: create a producer

In the same class, create a method that acts as a producer:

```java
@Bean
public Supplier<SensorEventMessage> sensorEventProducer() {
    return () -> new SensorEventMessage("2", Instant.now(), 30.0);
}
```

This producer just simulates sending a message from a sensor with id 2.

That's it, we are done writing code! We do not need to write serializers and deserializers: message conversion is handled automatically by Spring Cloud Stream (the default content type is application/json).

Step five: create the configurations for Spring Cloud Stream

Rename the application.properties file to application.yml and add this configuration:

```yaml
spring:
  application:
    name: spring-cloud-stream
  cloud:
    function:
      definition: logEventReceived;sensorEventProducer
    stream:
      bindings:
        logEventReceived-in-0: # a consumer
          destination: sensor_event_topic
          group: ${spring.application.name}
        sensorEventProducer-out-0: # a producer
          destination: sensor_event_topic
```

Let's analyze the file:

- The spring.cloud.function.definition property lists the functions (beans declared with @Bean) that Spring Cloud Stream should bind. Multiple functions are separated by a semicolon.

- The spring.cloud.stream.bindings property declares the destination of each producer/consumer (here, the name of a Kafka topic).

Binding names follow this naming convention:

- for input bindings: `<functionName>-in-<index>`
- for output bindings: `<functionName>-out-<index>`

The index is the position of the input or output argument; it is always 0 for functions with a single input and a single output, like the ones in this tutorial.
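The convention is simple enough to express as a helper. This is a hypothetical plain-Java sketch (the BindingNames class and its methods are not part of Spring) that splits a definition string on semicolons and derives the binding names used in the configuration above.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper mirroring the binding naming convention; not Spring code.
public class BindingNames {

    // <functionName>-in-<index> for inputs, <functionName>-out-<index> for outputs
    public static String bindingName(String functionName, boolean input, int index) {
        return functionName + (input ? "-in-" : "-out-") + index;
    }

    // Splits a spring.cloud.function.definition value on ';' into individual definitions
    public static List<String> definitions(String definition) {
        List<String> result = new ArrayList<>();
        for (String part : definition.split(";")) {
            String trimmed = part.trim();
            if (!trimmed.isEmpty()) {
                result.add(trimmed);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(definitions("logEventReceived;sensorEventProducer")); // prints [logEventReceived, sensorEventProducer]
        System.out.println(bindingName("logEventReceived", true, 0));            // prints logEventReceived-in-0
        System.out.println(bindingName("sensorEventProducer", false, 0));        // prints sensorEventProducer-out-0
    }
}
```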

The logEventReceived consumer has, in addition to the destination, a group property that sets the name of its Message Group.

Within a Message Group, each partition of the topic is assigned to exactly one consumer (a consumer can own more than one partition), so the consumers of the group share the messages among themselves and the application scales horizontally. Consumers in different groups, on the other hand, each receive all the messages.
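To see how a Message Group spreads the load, here is a simplified plain-Java model of a range-style assignment for a single topic, loosely following Kafka's range strategy. It is an illustration only (GroupAssignment and assign are hypothetical names): the real assignment is negotiated through Kafka's consumer group protocol and depends on the configured assignor.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified, hypothetical model of a range-style partition assignment for one topic.
public class GroupAssignment {

    // Returns, for each consumer of the group, the list of partitions it owns
    public static List<List<Integer>> assign(int partitions, int consumers) {
        List<List<Integer>> result = new ArrayList<>();
        int base = partitions / consumers;  // every consumer gets at least this many
        int extra = partitions % consumers; // the first 'extra' consumers get one more
        int next = 0;
        for (int c = 0; c < consumers; c++) {
            int count = base + (c < extra ? 1 : 0);
            List<Integer> owned = new ArrayList<>();
            for (int i = 0; i < count; i++) {
                owned.add(next++); // each partition is handed out exactly once
            }
            result.add(owned);
        }
        return result;
    }

    public static void main(String[] args) {
        // 3 partitions, 2 instances of the application in the same group
        System.out.println(assign(3, 2)); // prints [[0, 1], [2]]
        // more instances than partitions: the last one stays idle
        System.out.println(assign(2, 3)); // prints [[0], [1], []]
    }
}
```

The second call shows why adding instances beyond the number of partitions does not increase throughput.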

Step six: start Kafka locally via Docker container

Use the Docker Compose file from the Confluent site, so that you can start Kafka locally with Docker:

```yaml
---
version: '3'
services:
  zookeeper:
    image: confluentinc/cp-zookeeper:7.3.0
    container_name: zookeeper
    environment:
      ZOOKEEPER_CLIENT_PORT: 2181
      ZOOKEEPER_TICK_TIME: 2000

  broker:
    image: confluentinc/cp-kafka:7.3.0
    container_name: broker
    ports:
      # To learn about configuring Kafka for access across networks see
      # https://www.confluent.io/blog/kafka-client-cannot-connect-to-broker-on-aws-on-docker-etc/
      - "9092:9092"
    depends_on:
      - zookeeper
    environment:
      KAFKA_BROKER_ID: 1
      KAFKA_ZOOKEEPER_CONNECT: 'zookeeper:2181'
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_INTERNAL:PLAINTEXT
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://localhost:9092,PLAINTEXT_INTERNAL://broker:29092
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
      KAFKA_TRANSACTION_STATE_LOG_MIN_ISR: 1
      KAFKA_TRANSACTION_STATE_LOG_REPLICATION_FACTOR: 1
```

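We do not have to run any commands to start Docker Compose.

In fact, since Spring Boot 3.1, with the spring-boot-docker-compose dependency, Docker Compose is started automatically at application startup, as long as the compose file (for example docker-compose.yml) is in the application's working directory, typically the project root!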

Step seven: start the application

Let's start the application and analyze the logs. You will see a log like this every second:

```text
Message received: SensorEventMessage[sensorId=2, timestampEvent=2023-02-27T19:40:08.367719Z, degrees=30.0]
```

This is because, by default, Spring Cloud Stream polls the Supplier bean once per second (the interval can be changed with the spring.integration.poller.fixed-delay property).

So the producer sends messages every second. The application correctly produces and consumes the messages!
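The polling behaviour can be modeled in plain Java with a ScheduledExecutorService: a poller calls Supplier.get() at a fixed delay and hands each result on. This is only a sketch of the idea (PollingSketch and its poll method are hypothetical); Spring Cloud Stream uses its own poller, configured through spring.integration.poller.fixed-delay.

```java
import java.time.Instant;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

// Hypothetical plain-Java model of a fixed-delay poller; not Spring's implementation.
public class PollingSketch {

    public record SensorEventMessage(String sensorId, Instant timestampEvent, Double degrees) {}

    // Calls supplier.get() every delayMillis (fixed delay) until 'count' messages are collected.
    public static List<SensorEventMessage> poll(Supplier<SensorEventMessage> supplier,
                                                long delayMillis, int count) {
        List<SensorEventMessage> received = new CopyOnWriteArrayList<>();
        CountDownLatch done = new CountDownLatch(count);
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        try {
            scheduler.scheduleWithFixedDelay(() -> {
                if (done.getCount() > 0) { // stop collecting once we have enough
                    received.add(supplier.get());
                    done.countDown();
                }
            }, 0, delayMillis, TimeUnit.MILLISECONDS);
            done.await(10, TimeUnit.SECONDS); // safety timeout so the sketch never hangs
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt, return what we have
        } finally {
            scheduler.shutdownNow();
        }
        return received;
    }

    public static void main(String[] args) {
        // 1000 ms mirrors the default one-second interval
        List<SensorEventMessage> messages =
                poll(() -> new SensorEventMessage("2", Instant.now(), 30.0), 1000, 3);
        messages.forEach(m -> System.out.println("Message received: " + m));
    }
}
```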

What if we wanted to use the Reactive paradigm?

Step Eight: Spring Cloud Stream Reactive

With the new version of Spring Cloud Stream, there is no need to import any additional dependency.

The reactive version becomes:

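```java
@Bean
public Function<Flux<SensorEventMessage>, Mono<Void>> logEventReceived() {
    return fluxEvent -> fluxEvent
            .doOnNext(sensorEventMessage -> log.info("Message received: {}", sensorEventMessage))
            .then();
}

@Bean
public Supplier<Flux<SensorEventMessage>> sensorEventProducer() {
    // Emits a message every five seconds; the blocking sleep runs on the boundedElastic scheduler
    return () -> Flux.fromStream(Stream.generate(() -> {
                try {
                    Thread.sleep(5000);
                    return new SensorEventMessage("2", Instant.now(), 30.0);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    // returning null here would make the Flux fail with a NullPointerException
                    throw new IllegalStateException("Producer interrupted", e);
                }
            }))
            .subscribeOn(Schedulers.boundedElastic())
            .share();
}
```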

Note that the consumer is now a Function that takes as input a Flux of messages and returns a Mono<Void>.

However, it can also be written this way:

```java
@Bean
public Consumer<Flux<SensorEventMessage>> logEventReceived() {
    return fluxEvent -> {
        fluxEvent
                .doOnNext(sensorEventMessage -> log.info("Message received: {}", sensorEventMessage))
                .subscribe();
    };
}
```

Since in Project Reactor "nothing happens until you subscribe", here we have to call subscribe explicitly.

So, in reactive mode, a consumer can be written with either the Function or the Consumer interface.

As for the producer, in reactive mode Spring Cloud Stream does not poll the Supplier every second: it invokes it once and subscribes to the returned Flux. That is why we build the stream ourselves with Stream.generate (and instead of sending a message every second, we send one every five seconds).

Step nine: Producer from a synchronous stream

It is often necessary to send a message from a synchronous flow, for example after a REST request. In that case a Supplier no longer fits, because it is the framework, not our code, that decides when a Supplier is invoked.

Spring Cloud Stream provides a bean of type StreamBridge for this use case!

We create a producer bean that uses the StreamBridge class to send a message.

This producer will be invoked by a REST API:

```java
@Component
@RequiredArgsConstructor
public class ProducerSensorEvent {

    private final StreamBridge streamBridge;

    public boolean publishMessage(SensorEventMessage message) {
        return streamBridge.send("sensorEventAnotherProducer-out-0", message);
    }
}

// REST API
@RestController
@RequestMapping("sensor-event")
@RequiredArgsConstructor
public class SensorEventApi {

    private final ProducerSensorEvent producerSensorEvent;

    @PostMapping
    public Mono<ResponseEntity<Boolean>> sendDate(@RequestBody Mono<SensorEventMessage> sensorEventMessage) {
        return sensorEventMessage
                .map(producerSensorEvent::publishMessage)
                .map(ResponseEntity::ok);
    }
}
```

Nothing could be simpler. The send method of the StreamBridge class takes as input the name of the binding and the message to be sent.

Of course, we have to add this new binding to application.yml, but only in the bindings section (we do not add it to spring.cloud.function.definition, since it is not a function):

```yaml
sensorEventAnotherProducer-out-0: # another producer
  destination: sensor_event_topic
```

To send the message, we can make a REST call like this one (using HTTPie):

```shell
http POST :8080/sensor-event sensorId=1 timestampEvent=2023-02-26T15:15:00Z degrees=26.0
```
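The same request can be sent from Java with the JDK's built-in java.net.http.HttpClient (Java 11+). This sketch assumes the application is running on localhost:8080; SendSensorEvent, BODY and buildRequest are illustrative names. Unlike the HTTPie call, which sends every field as a string, it sends degrees as a JSON number.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Sketch: POST a sensor event to the tutorial's endpoint (assumes the app runs on localhost:8080).
public class SendSensorEvent {

    // JSON body equivalent to the HTTPie call above
    public static final String BODY =
            "{\"sensorId\":\"1\",\"timestampEvent\":\"2023-02-26T15:15:00Z\",\"degrees\":26.0}";

    // Builds the POST request without sending it
    public static HttpRequest buildRequest(String baseUrl, String json) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/sensor-event"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(json))
                .build();
    }

    public static void main(String[] args) throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        HttpResponse<String> response =
                client.send(buildRequest("http://localhost:8080", BODY), HttpResponse.BodyHandlers.ofString());
        // The endpoint answers with the boolean returned by StreamBridge.send
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```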

Bonus Step: Composition of Functions in Spring Cloud Function

In Spring Cloud Function, we can chain multiple functions. For example, in addition to logging the received message, suppose we also want to save it to a DB. To do that, we write another function that takes the message as input and saves it to the DB:

```java
@Bean
public Function<Flux<SensorEventMessage>, Mono<Void>> saveInDBEventReceived() {
    return fluxEvent -> fluxEvent
            .doOnNext(sensorEventMessage -> log.info("Message saving: {}", sensorEventMessage))
            .flatMap(sensorEventMessage -> sensorEventDao.save(Mono.just(sensorEventMessage)))
            .then();
}
```

Then we modify the log function so that it returns the message again after logging it. The output of the logEventReceived function will be the input of the saveInDBEventReceived function:

```java
@Bean
public Function<Flux<SensorEventMessage>, Flux<SensorEventMessage>> logEventReceived() {
    return fluxEvent -> fluxEvent
            .doOnNext(sensorEventMessage -> log.info("Message received: {}", sensorEventMessage));
}
```

To concatenate the two functions, you can use the | (pipe) operator in the application.yml:

```yaml
spring:
  application:
    name: spring-cloud-stream
  cloud:
    function:
      definition: logEventReceived|saveInDBEventReceived;sensorEventProducer
    stream:
      bindings:
        logEventReceivedSaveInDBEventReceived-in-0: # a consumer
          destination: sensor_event_topic
          group: ${spring.application.name}
        sensorEventProducer-out-0: # a producer
          destination: sensor_event_topic
        sensorEventAnotherProducer-out-0: # another producer
          destination: sensor_event_topic
```

Note two things:

- The two functions logEventReceived and saveInDBEventReceived are concatenated with the pipe. The output of the first function becomes the input of the second one.

- The name of the binding becomes logEventReceivedSaveInDBEventReceived, which is the concatenation of the names of the two functions.
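In plain Java, the pipe corresponds to ordinary function composition with Function.andThen: the output of the first function is the input of the second. A minimal sketch using strings instead of Flux, with hypothetical in-memory lists standing in for the log and the DB; Spring Cloud Function performs the composition for us, this only shows the idea.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

// Plain-Java analogue of "logEventReceived|saveInDBEventReceived" (strings instead of Flux).
public class CompositionSketch {

    // Like logEventReceived: records a log line and returns the message unchanged
    public static Function<String, String> logEventReceived(List<String> log) {
        return message -> {
            log.add("Message received: " + message);
            return message;
        };
    }

    // Like saveInDBEventReceived: "saves" the message into a list standing in for the DB
    public static Function<String, Boolean> saveInDBEventReceived(List<String> db) {
        return db::add;
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        List<String> db = new ArrayList<>();
        // andThen feeds the output of the first function into the second, like the | operator
        Function<String, Boolean> composed = logEventReceived(log).andThen(saveInDBEventReceived(db));
        composed.apply("sensor-2: 30.0");
        System.out.println(log); // prints [Message received: sensor-2: 30.0]
        System.out.println(db);  // prints [sensor-2: 30.0]
    }
}
```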

What if we wanted to add custom Kafka properties?

Customize properties for Kafka

To do this, we use the Kafka-specific properties under spring.cloud.stream.kafka. We can add global configuration (spring.cloud.stream.kafka.binder.configuration), configuration that applies to all producers (spring.cloud.stream.kafka.binder.producerProperties), or configuration specific to a single producer (`spring.cloud.stream.kafka.bindings.<channelName>.producer..`).

The same applies to consumers.

For example:

```yaml
spring:
  application:
    name: spring-cloud-stream
  cloud:
    function:
      definition: logEventReceived|saveInDBEventReceived;sensorEventProducer
    stream:
      kafka:
        binder:
          brokers: localhost:9092
          # configuration:          # global props (all producers and consumers)
          #   bootstrap.servers: localhost:9092
          consumerProperties:       # props for all consumers
            max.poll.records: 250
          # producerProperties:     # props for all producers
      bindings:
        logEventReceivedSaveInDBEventReceived-in-0: # a consumer
          destination: sensor_event_topic
          group: ${spring.application.name}
        sensorEventProducer-out-0: # a producer
          destination: sensor_event_topic
        sensorEventAnotherProducer-out-0: # another producer
          destination: sensor_event_topic
```

Error Handling: retries and DLQ

To test Error Handling in Spring Cloud Stream, we modify the code of the DAO class like this:

```java
public Mono<SensorEventMessage> save(Mono<SensorEventMessage> sensorEventMessageMono) {
    return sensorEventMessageMono
            .doOnNext(sensorEventMessage -> log.info("Message saving: {}", sensorEventMessage))
            .map(sensorEventMessage -> {
                if (sensorEventMessage.degrees() == 10.0) throw new RuntimeException("Error in saveMessage");
                return sensorEventMessage;
            })
            .doOnNext(sensorEventMessage -> memoryDB.put(sensorEventMessage.sensorId(), sensorEventMessage))
            .doOnNext(sensorEventMessage -> log.info("Message saved with id: {}", sensorEventMessage.sensorId()));
}
```

We simulate an error if the received event contains 10 degrees.

Imperative version

In the imperative version, if processing a message raises an exception, a Spring Cloud Stream consumer attempts to process it three times in total.

The default value of maxAttempts can be overridden in this way:

```yaml
bindings:
  logEventReceivedSaveInDBEventReceived-in-0: # a consumer
    destination: sensor_event_topic
    group: ${spring.application.name}
    consumer:
      max-attempts: 3 # default is 3
```

You can also choose which exceptions are retried by populating the retryable-exceptions property like this:

```yaml
bindings:
  logEventReceivedSaveInDBEventReceived-in-0: # a consumer
    destination: sensor_event_topic
    group: ${spring.application.name}
    consumer:
      max-attempts: 3 # default is 3
      retryable-exceptions:
        java.lang.RuntimeException: true # retryable
        java.lang.IllegalArgumentException: false # not retryable
```

If an exception is not listed in retryable-exceptions, it is retried (this default is controlled by the default-retryable consumer property, which is true by default).
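The interplay between max-attempts and retryable-exceptions can be sketched in plain Java. This is a simplified model, not Spring Retry's code: the most specific configured class in the exception's hierarchy decides, unlisted exceptions are retried by default, and the RetryModel class and its Outcome record are hypothetical.

```java
import java.util.Map;

// Simplified model of max-attempts + retryable-exceptions; not Spring Retry's implementation.
public class RetryModel {

    public record Outcome(int attempts, boolean succeeded) {}

    // Walks from the exception's class up its superclasses; the first configured class wins.
    public static boolean isRetryable(Throwable error, Map<Class<? extends Throwable>, Boolean> retryable,
                                      boolean defaultRetryable) {
        for (Class<?> type = error.getClass(); type != null; type = type.getSuperclass()) {
            Boolean flag = retryable.get(type);
            if (flag != null) {
                return flag;
            }
        }
        return defaultRetryable; // unlisted exceptions fall back to the default
    }

    // Invokes the handler until it succeeds, attempts run out, or a non-retryable exception occurs.
    public static Outcome process(Runnable handler, int maxAttempts,
                                  Map<Class<? extends Throwable>, Boolean> retryable) {
        int attempts = 0;
        while (true) {
            attempts++;
            try {
                handler.run();
                return new Outcome(attempts, true);
            } catch (RuntimeException e) {
                if (attempts >= maxAttempts || !isRetryable(e, retryable, true)) {
                    // here the binder would give up and, if configured, send the message to the DLQ
                    return new Outcome(attempts, false);
                }
            }
        }
    }

    public static void main(String[] args) {
        Map<Class<? extends Throwable>, Boolean> config = Map.of(
                RuntimeException.class, true,
                IllegalArgumentException.class, false);
        System.out.println(process(() -> { throw new RuntimeException("Error in saveMessage"); }, 3, config));
        // prints Outcome[attempts=3, succeeded=false]
        System.out.println(process(() -> { throw new IllegalArgumentException("bad input"); }, 3, config));
        // prints Outcome[attempts=1, succeeded=false]
    }
}
```

IllegalArgumentException is a subclass of RuntimeException, which is why the lookup starts from the most specific class.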

DLQs are also handled out of the box by Spring Cloud Stream in the imperative version. Just add the following properties:

```yaml
cloud:
  function:
    definition: logEventReceivedSaveInDBEventReceived;sensorEventProducer
  stream:
    kafka:
      binder:
        brokers: localhost:9092
        consumerProperties: # consumer props
          max.poll.records: 250
      bindings:
        logEventReceivedSaveInDBEventReceived-in-0:
          consumer:
            enable-dlq: true
            dlq-name: sensor_event_topic_dlq
```

With enable-dlq we tell Spring that we want the consumer to have a DLQ.

With dlq-name we set the name of the DLQ topic.

To test Error Handling, we can send a message from the Kafka CLI:

1. With Docker and the project running, run `docker exec -it broker bash`.
2. Run `/bin/kafka-console-consumer --bootstrap-server broker:9092 --topic sensor_event_topic_dlq`. This way you will see the contents of the DLQ topic.
3. Open another shell and run the same command as in step 1.
4. Run `/bin/kafka-console-producer --bootstrap-server broker:9092 --topic sensor_event_topic`.
5. Now you can send the following message (on a single line, since the console producer sends each line as a separate message):

```json
{ "sensorId": "test-id", "timestampEvent": "2023-06-09T18:40:00Z", "degrees": 10.0 }
```

We will see the following log messages:

```text
Message received: SensorEventMessage[sensorId=test-id, timestampEvent=2023-06-09T18:40:00Z, degrees=10.0]
Message saving: SensorEventMessage[sensorId=test-id, timestampEvent=2023-06-09T18:40:00Z, degrees=10.0]
Message received: SensorEventMessage[sensorId=test-id, timestampEvent=2023-06-09T18:40:00Z, degrees=10.0]
Message saving: SensorEventMessage[sensorId=test-id, timestampEvent=2023-06-09T18:40:00Z, degrees=10.0]
Message received: SensorEventMessage[sensorId=test-id, timestampEvent=2023-06-09T18:40:00Z, degrees=10.0]
Message saving: SensorEventMessage[sensorId=test-id, timestampEvent=2023-06-09T18:40:00Z, degrees=10.0]
org.springframework.messaging.MessageHandlingException: error occurred in message handler
[org.springframework.cloud.stream.function.FunctionConfiguration$FunctionToDestinationBinder$1@5c81d158],
failedMessage=GenericMessage [payload=byte[85], headers={kafka_offset=99,
kafka_consumer=org.apache.kafka.clients.consumer.KafkaConsumer@306f6122, deliveryAttempt=3,
kafka_timestampType=CREATE_TIME, kafka_receivedPartitionId=0, kafka_receivedTopic=sensor_event_topic,
kafka_receivedTimestamp=1686585227257, contentType=application/json, kafka_groupId=spring-cloud-stream}]
...
Caused by: java.lang.RuntimeException: Error in saveMessage
    at com.vincenzoracca.springcloudstream.dao.SensorEventInMemoryDao.lambda$save$1(SensorEventInMemoryDao.java:26)
    at com.vincenzoracca.springcloudstream.event.SensorEventImperativeFunctions.lambda$saveInDBEventReceived$2(SensorEventImperativeFunctions.java:45)
...
o.a.k.clients.producer.ProducerConfig : ProducerConfig values: ...
```

As the logs show, the message is processed three times in total before the error is finally propagated and the message is sent to the DLQ (you can also check the DLQ topic in the shell running the console consumer).

Reactive version

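With the reactive version of Spring Cloud Stream, the properties set above don't work! A reactive function is invoked only once, to connect the whole Flux, rather than once per message, so the binder cannot retry or dead-letter individual messages for us.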

One way to handle retries and DLQ is to:

- Use a consumer made of a single function (no composition).

- Wrap the consumer's business logic in a flatMap, so that the original message is still available to send to the DLQ.

- Handle retries with Reactor's retry operator.

- Create a binding for the DLQ and send the failed message to it.

Here is the reactive consumer, modified to handle retries and DLQ:

```java
private static final String DLQ_CHANNEL = "sensorEventDlqProducer-out-0";

@Bean
public Function<Flux<Message<SensorEventMessage>>, Mono<Void>> logEventReceivedSaveInDBEventReceived() {
    return fluxEvent -> fluxEvent
            // flatMap keeps each Message in scope, so it can be sent to the DLQ on failure
            .flatMap(this::consumeMessage)
            .then();
}

private Mono<SensorEventMessage> consumeMessage(Message<SensorEventMessage> message) {
    return Mono.just(message)
            .doOnNext(msg -> log.info("Message received: {}", msg))
            .flatMap(msg -> sensorEventDao.save(Mono.just(msg.getPayload())))
            // re-subscribes up to two more times after a failure
            .retry(2)
            // after the last failure, hand the original message to the DLQ binding
            .onErrorResume(throwable -> dlqEventUtil.handleDLQ(message, throwable, DLQ_CHANNEL));
}
```

DlqEventUtil is a utility class that sends a message to the given DLQ binding:

```java
@Component
@Slf4j
@RequiredArgsConstructor
public class DlqEventUtil {

    private final StreamBridge streamBridge;

    public <T> Mono<T> handleDLQ(Message<T> message, Throwable throwable, String channel) {
        log.error("Error for message: {}", message, throwable);
        streamBridge.send(channel, message);
        return Mono.empty();
    }
}
```
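Note that Reactor's retry(2) re-subscribes up to two times, so the message is attempted three times in total, the same as the imperative default of max-attempts: 3. Because retry re-subscribes to the whole chain starting from Mono.just(message), the "Message received" log line is repeated too. The following plain-Java model (hypothetical names, no Reactor involved) counts the attempts and then falls back to a list standing in for the DLQ, the way onErrorResume hands the message to DlqEventUtil.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;
import java.util.function.Supplier;

// Plain-Java model of .retry(retries).onErrorResume(fallback); no Reactor involved.
public class RetryThenDlqModel {

    // One initial attempt plus up to 'retries' re-attempts; if all fail, the fallback gets the last error.
    public static <T> T retryThenFallback(Supplier<T> attempt, long retries,
                                          Function<RuntimeException, T> fallback) {
        RuntimeException lastError = null;
        for (long i = 0; i <= retries; i++) {
            try {
                return attempt.get();
            } catch (RuntimeException e) {
                lastError = e;
            }
        }
        return fallback.apply(lastError);
    }

    public static void main(String[] args) {
        AtomicInteger attempts = new AtomicInteger();
        List<String> dlq = new ArrayList<>(); // stands in for the sensorEventDlqProducer-out-0 binding
        String result = retryThenFallback(
                () -> {
                    attempts.incrementAndGet();
                    throw new RuntimeException("Error in saveMessage");
                },
                2, // like .retry(2)
                error -> {
                    dlq.add("test-id"); // like dlqEventUtil.handleDLQ(...)
                    return null;        // like Mono.empty(): nothing is emitted downstream
                });
        System.out.println(attempts.get() + " attempts, DLQ = " + dlq + ", result = " + result);
        // prints 3 attempts, DLQ = [test-id], result = null
    }
}
```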

The application.yml file becomes:

```yaml
spring:
  application:
    name: spring-cloud-stream
  cloud:
    function:
      definition: logEventReceivedSaveInDBEventReceived;sensorEventProducer
    stream:
      kafka:
        binder:
          brokers: localhost:9092
          consumerProperties: # consumer props
            max.poll.records: 250
      bindings:
        logEventReceivedSaveInDBEventReceived-in-0: # a consumer
          destination: sensor_event_topic
          group: ${spring.application.name}
        sensorEventProducer-out-0: # a producer
          destination: sensor_event_topic
        sensorEventAnotherProducer-out-0: # another producer
          destination: sensor_event_topic
        sensorEventDlqProducer-out-0: # DLQ producer
          destination: sensor_event_topic_dlq
```

Testing Spring Cloud Stream applications

When you imported the project from Spring Initializr, the following dependency was also added to the pom:

```xml
<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-stream-test-binder</artifactId>
    <scope>test</scope>
</dependency>
```

Through it, we can easily test our consumers and producers. Let's see how:

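```java
import static org.assertj.core.api.Assertions.assertThat;

import com.fasterxml.jackson.databind.ObjectMapper;
import java.io.IOException;
import java.time.Instant;
import org.junit.jupiter.api.Test;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.cloud.stream.binder.test.InputDestination;
import org.springframework.cloud.stream.binder.test.OutputDestination;
import org.springframework.cloud.stream.binder.test.TestChannelBinderConfiguration;
import org.springframework.context.annotation.Import;
import org.springframework.messaging.support.GenericMessage;
import org.springframework.test.annotation.DirtiesContext;
import reactor.test.StepVerifier;
// plus the project's own SensorEventMessage, ProducerSensorEvent and SensorEventDao

@SpringBootTest
@Import(TestChannelBinderConfiguration.class)
class SensorEventTestsIT {

    @Autowired
    private InputDestination input;

    @Autowired
    private OutputDestination output;

    @Autowired
    private ObjectMapper objectMapper;

    @Autowired
    private ProducerSensorEvent producerSensorEvent;

    @Autowired
    private SensorEventDao sensorEventDao;

    @Test
    public void produceSyncMessageTest() throws IOException {
        SensorEventMessage sensorEventMessage = new SensorEventMessage("3", Instant.now(), 15.0);
        producerSensorEvent.publishMessage(sensorEventMessage);
        byte[] payloadBytesReceived = output.receive(100L, "sensor_event_topic").getPayload();
        assertThat(objectMapper.readValue(payloadBytesReceived, SensorEventMessage.class)).isEqualTo(sensorEventMessage);
    }

    @Test
    public void producePollableMessageTest() throws IOException {
        byte[] payloadBytesReceived = output.receive(5000L, "sensor_event_topic").getPayload();
        SensorEventMessage sensorEventMessageReceived = objectMapper.readValue(payloadBytesReceived, SensorEventMessage.class);
        assertThat(sensorEventMessageReceived.sensorId()).isEqualTo("2");
        assertThat(sensorEventMessageReceived.degrees()).isEqualTo(30.0);
    }

    @DirtiesContext(methodMode = DirtiesContext.MethodMode.BEFORE_METHOD)
    @Test
    public void consumeMessageTest() throws IOException {
        SensorEventMessage sensorEventMessage = new SensorEventMessage("3", Instant.now(), 15.0);
        byte[] payloadBytesSend = objectMapper.writeValueAsBytes(sensorEventMessage);
        input.send(new GenericMessage<>(payloadBytesSend), "sensor_event_topic");
        StepVerifier
                .create(sensorEventDao.findAll())
                .expectNext(sensorEventMessage)
                .expectComplete()
                .verify();
    }
}
```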

By importing the TestChannelBinderConfiguration class, Spring provides us with InputDestination and OutputDestination beans, which we can use to test consumers and producers respectively.

We don't need the actual binder; the framework provides us with a test binder.

Let us analyze the tests one by one:

- In produceSyncMessageTest, we use our synchronous producer to send a message, then verify with the OutputDestination that the message was actually sent to the destination (the Kafka topic).

- In producePollableMessageTest, we do not send any message explicitly, since the producer emits messages on its own. We wait up to five seconds to verify that the message was sent correctly, since the producer emits a message every five seconds.

- In consumeMessageTest, we use the InputDestination to send a message to the Kafka topic and then verify that the same message has been saved to the DB. The method is also annotated with @DirtiesContext(methodMode = DirtiesContext.MethodMode.BEFORE_METHOD), to clean up the context (and thus the DB) before the method is invoked.

Conclusions

In this article we saw how to use the new version of Spring Cloud Stream.

The fact that we can focus solely on business logic, writing simple Java functions that are independent of the broker used, certainly makes this framework very interesting. We could switch from Kafka to RabbitMQ without changing the code we just wrote. What a powerhouse! We can also use several binders in the same application.

The full code is available in my GitHub repository.


Recommended books on Spring, Docker, and Kubernetes:

- Cloud Native Spring in Action: https://amzn.to/3xZFg1S

- Pro Spring 5 (Spring from zero to hero): https://amzn.to/3KvfWWO

- Pivotal Certified Professional Core Spring 5 Developer Exam: A Study Guide Using Spring Framework 5 (for the Spring certification): https://amzn.to/3KxbJSC

- Pro Spring Boot 2: An Authoritative Guide to Building Microservices, Web and Enterprise Applications, and Best Practices (Spring Boot in detail): https://amzn.to/3TrIZic

- Docker: Sviluppare e rilasciare software tramite container: https://amzn.to/3AZEGDI

- Kubernetes TakeAway: Implementa i cluster K8s come un professionista: https://amzn.to/3dVxxuP

References

- Cloud Native Spring in Action, chapter 10

- https://docs.spring.io/spring-cloud-stream/docs/current/reference/html/

- https://cloud.spring.io/spring-cloud-stream-binder-kafka/spring-cloud-stream-binder-kafka.html#_configuration_options

- https://cloud.spring.io/spring-cloud-stream/multi/multi_spring-cloud-stream-overview-binders.html