The problem

Right now I am working on projects that use many AWS services, such as DynamoDB, SQS, and S3.

One of the problems I have encountered working with cloud technologies is testing these services locally as well.

Fortunately, there are tools such as Testcontainers and LocalStack that easily circumvent this problem.

Therefore, I thought I would write an article about it!

What is Testcontainers

In the past, when we needed to write integration tests, we often had to use libraries that simulated the tools we would later use in production (databases, web servers, etc.).

For example, for database testing, we often used in-memory databases such as H2.

Although this allowed us to use a database during testing, the tests were not entirely "true", since in production we would certainly be using a different database!

Thanks to the spread of container technology, and of Docker in particular, libraries have been developed that let us create, during the testing phase, containers of the very tools we will use in production, making the tests more realistic.

Testcontainers is a Java library that does exactly that: it uses Docker to create containers during testing and destroys them once the tests are finished.

Testcontainers already has integrations with the most widely used tools (databases, message queues, and more).

All we have to do is add to our project the Testcontainers module for the tool we want to use.
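In Testcontainers, containers implement AutoCloseable (through the Startable interface, whose close() simply calls stop()), so their lifecycle follows the usual try-with-resources pattern: start the container, run the test against it, then stop and remove it. The sketch below mimics only that lifecycle. FakeContainer and LifecycleDemo are hypothetical classes that record events instead of talking to Docker, so this is not Testcontainers code and it runs without Docker.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a Testcontainers container:
// it only records lifecycle events instead of talking to Docker.
class FakeContainer implements AutoCloseable {
    private final List<String> events;

    FakeContainer(List<String> events) {
        this.events = events;
    }

    FakeContainer start() {
        events.add("start"); // a real container would be created and started here
        return this;
    }

    @Override
    public void close() {
        events.add("stop"); // a real container would be stopped and removed here
    }
}

class LifecycleDemo {
    static String run() {
        List<String> events = new ArrayList<>();
        try (FakeContainer container = new FakeContainer(events).start()) {
            events.add("test"); // the test body talks to the running container
        }
        return String.join(",", events);
    }
}
```

In the setup used later in this article, the container is started in a static block and never closed explicitly; in that case Testcontainers relies on its Ryuk helper container to remove it once the test JVM exits.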

What is LocalStack

LocalStack is a tool that uses container technology to emulate AWS services locally, such as AWS Lambda, S3, DynamoDB, Kinesis, SQS, and SNS. By default, all the emulated services are exposed on a single edge port, 4566.

In this article I will show you how to test the DynamoDB service using LocalStack and Testcontainers.

First step: initialize the project

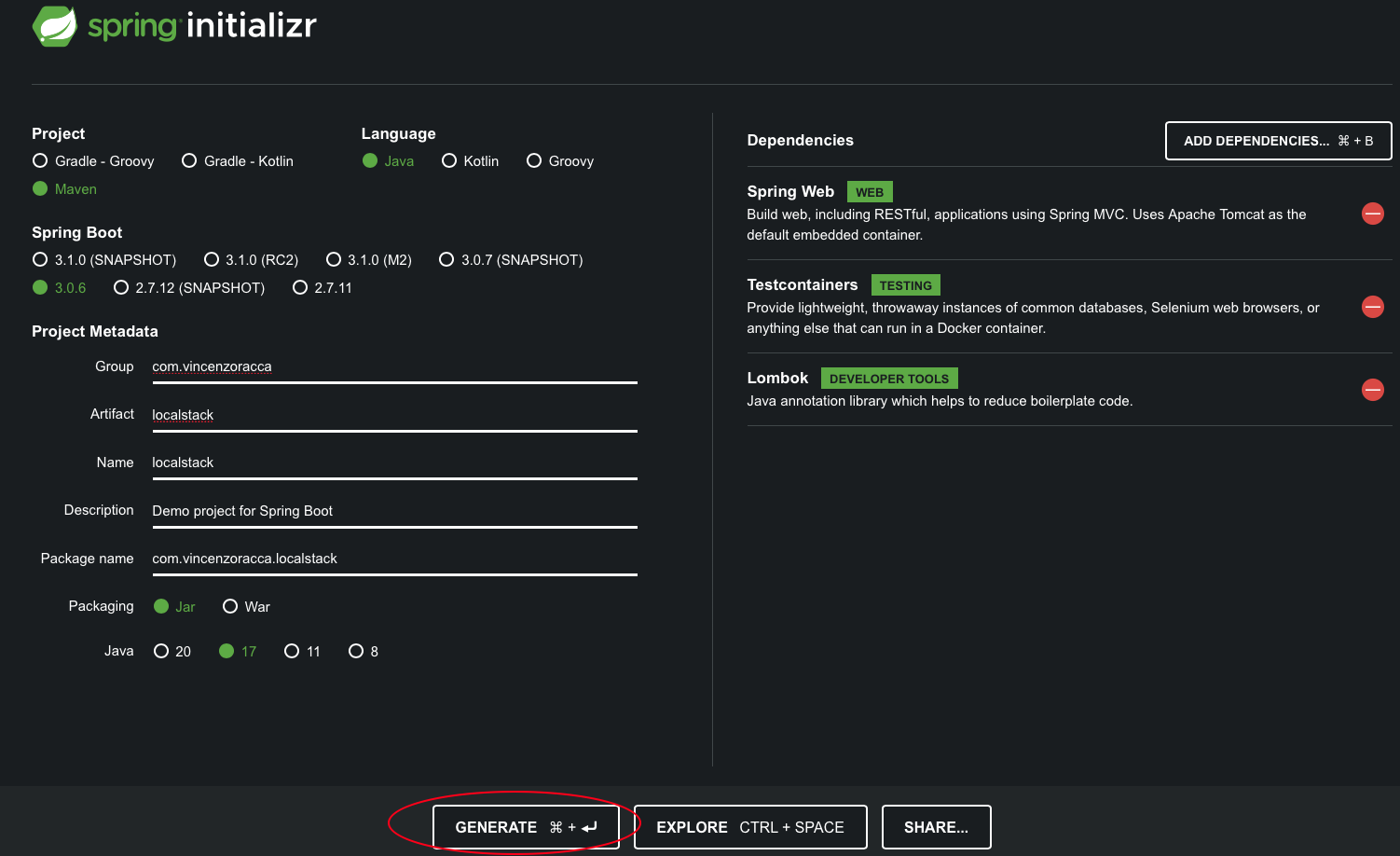

In this example we will use the Spring Initializr to create the project skeleton. If you are not using Spring Boot, feel free to skip this step.

Use the following configuration and then click "Generate":

Unzip the project you just downloaded and open it with an IDE.

In the pom.xml, add the dependencies for the DynamoDB module of the AWS SDK for Java and for the LocalStack module of Testcontainers:

```xml
<dependency>
    <groupId>com.amazonaws</groupId>
    <artifactId>aws-java-sdk-dynamodb</artifactId>
    <version>1.12.468</version>
</dependency>

<dependency>
    <groupId>org.testcontainers</groupId>
    <artifactId>localstack</artifactId>
    <!-- no explicit version: expected to come from a BOM (Spring Boot dependency management or testcontainers-bom) -->
    <scope>test</scope>
</dependency>
```

Second step: create the DynamoDB configuration

In src/main/resources/application.properties, add the following properties:

```properties
amazon.dynamodb.endpoint=http://localhost:4566/
amazon.aws.accesskey=key
amazon.aws.secretkey=key2
```

Create a config sub-package and write a configuration class that connects to DynamoDB using the properties read from the application.properties file:

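```java
import com.amazonaws.auth.AWSCredentials;
import com.amazonaws.auth.AWSStaticCredentialsProvider;
import com.amazonaws.auth.BasicAWSCredentials;
import com.amazonaws.client.builder.AwsClientBuilder;
import com.amazonaws.services.dynamodbv2.AmazonDynamoDB;
import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClientBuilder;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class DynamoDBConfig {

    @Value("${amazon.dynamodb.endpoint}")
    private String amazonDynamoDBEndpoint;

    @Value("${amazon.aws.accesskey}")
    private String amazonAWSAccessKey;

    @Value("${amazon.aws.secretkey}")
    private String amazonAWSSecretKey;

    @Bean
    AmazonDynamoDB amazonDynamoDB(AWSCredentials amazonAWSCredentials) {
        // Custom endpoint (e.g. LocalStack); the second argument is the signing region
        AwsClientBuilder.EndpointConfiguration endpointConfiguration =
                new AwsClientBuilder.EndpointConfiguration(amazonDynamoDBEndpoint, "");

        return AmazonDynamoDBClientBuilder.standard()
                .withEndpointConfiguration(endpointConfiguration)
                // Use the configured keys instead of the SDK's default credential chain
                .withCredentials(new AWSStaticCredentialsProvider(amazonAWSCredentials))
                .build();
    }

    @Bean
    AWSCredentials amazonAWSCredentials() {
        return new BasicAWSCredentials(amazonAWSAccessKey, amazonAWSSecretKey);
    }
}
```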

Let us analyze the code:

- With @Value we read the properties from the application.properties and inject them into the class variables.

- The AmazonDynamoDB bean is the DynamoDB client, configured with the endpoint read from the properties, while the AWSCredentials bean holds the access key and the secret key.

Third step: write the User model

Create a model sub-package and write a User class:

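```java
import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBAttribute;
import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBAutoGeneratedKey;
import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBHashKey;
import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBTable;
import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

@DynamoDBTable(tableName = "Users")
@Data               // setters, equals/hashCode, toString (explicit getters below are not regenerated)
@NoArgsConstructor  // DynamoDBMapper needs a no-args constructor to instantiate the class
@AllArgsConstructor
public class User {

    private String id;
    private String firstname;
    private String surname;

    @DynamoDBHashKey(attributeName = "Id")
    @DynamoDBAutoGeneratedKey
    public String getId() {
        return id;
    }

    @DynamoDBAttribute(attributeName = "FirstName")
    public String getFirstname() {
        return firstname;
    }

    @DynamoDBAttribute(attributeName = "Surname")
    public String getSurname() {
        return surname;
    }
}
```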

In this class, the only relevant parts are the annotations that map it to a DynamoDB table.

Specifically:

- With @DynamoDBTable(tableName = "Users") we state that the User class maps the Users table in DynamoDB.

- With @DynamoDBHashKey(attributeName = "Id") we state that the id field is the hash (partition) key of the table. With @DynamoDBAutoGeneratedKey we also ask for the key to be generated automatically: strictly speaking, it is not DynamoDB that generates it but the DynamoDBMapper, which assigns a random UUID to a null key when the object is saved.

- With @DynamoDBAttribute we map the other attributes of the table.
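To make the behaviour of @DynamoDBAutoGeneratedKey concrete: the key is filled on the client side during the save and written back into the object. The JDK-only sketch below imitates just that step with a hypothetical SimpleUser class and a hypothetical KeyGeneratingTable backed by a HashMap; it is a simplification under those assumptions, not the SDK's implementation.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

// Hypothetical entity mirroring the User model (no DynamoDB annotations here).
class SimpleUser {
    String id;
    String firstname;

    SimpleUser(String id, String firstname) {
        this.id = id;
        this.firstname = firstname;
    }
}

// Hypothetical in-memory "table" imitating the mapper's key generation.
class KeyGeneratingTable {
    final Map<String, SimpleUser> items = new HashMap<>();

    void save(SimpleUser user) {
        if (user.id == null) {
            // Generated client side and written back into the object,
            // so the caller can read the id right after save().
            user.id = UUID.randomUUID().toString();
        }
        items.put(user.id, user); // same key => the item is overwritten (an update)
    }
}
```

Saving the same object twice keeps the generated id, so the second save overwrites the item instead of creating a new one: this is the behaviour that the saveAnExistingElementTest test relies on.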

Fourth step: write the User DAO

Create the dao sub-package and write the following DAO interface:

```java
import java.util.List;
import java.util.Optional;

public interface GenericDao<T, ID> {

    List<T> findAll();

    Optional<T> findById(ID id);

    T save(T entity);

    void delete(T entity);

    void deleteAll();
}
```

This interface contains the common methods of a DAO.

Now create the UserDao interface:

```java
public interface UserDao extends GenericDao<User, String> {
}
```

For our purposes we don't need any methods beyond the standard ones, so this interface only has to extend GenericDao.

We can also generalize the DAO operations performed on DynamoDB independently of the entity type. To do so, create an abstract class DynamoDBDao:

```java
import com.amazonaws.services.dynamodbv2.AmazonDynamoDB;
import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBMapper;
import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBScanExpression;
import java.util.Arrays;
import java.util.List;
import java.util.Optional;
import lombok.extern.slf4j.Slf4j;
import org.springframework.core.env.Environment;
import org.springframework.util.CollectionUtils;

@Slf4j
public abstract class DynamoDBDao<T, ID> implements GenericDao<T, ID> {

    protected final AmazonDynamoDB amazonDynamoDB;
    // Class token of the entity: needed at runtime because of type erasure
    private final Class<T> instanceClass;
    private final Environment env;
    // DynamoDBMapper is thread-safe, so one instance can be reused for every call
    private final DynamoDBMapper mapper;

    protected DynamoDBDao(AmazonDynamoDB amazonDynamoDB, Class<T> instanceClass, Environment env) {
        this.amazonDynamoDB = amazonDynamoDB;
        this.instanceClass = instanceClass;
        this.env = env;
        this.mapper = new DynamoDBMapper(amazonDynamoDB);
    }

    @Override
    public List<T> findAll() {
        // A Scan reads the whole table: fine for tests, expensive on large tables
        return mapper.scan(instanceClass, new DynamoDBScanExpression());
    }

    @Override
    public Optional<T> findById(ID id) {
        // load returns null when no item has the given hash key
        return Optional.ofNullable(mapper.load(instanceClass, id));
    }

    @Override
    public T save(T entity) {
        // The mapper also writes generated keys back into the entity
        mapper.save(entity);
        return entity;
    }

    @Override
    public void delete(T entity) {
        mapper.delete(entity);
    }

    @Override
    public void deleteAll() {
        // Safety net: the table is wiped only when the "test" profile is active
        List<String> activeProfiles = Arrays.asList(env.getActiveProfiles());
        if (activeProfiles.contains("test")) {
            List<T> entities = findAll();
            if (!CollectionUtils.isEmpty(entities)) {
                log.info("Cleaning entity table");
                entities.forEach(this::delete);
            }
        }
    }
}
```

Thanks to Java generics and the instanceClass field, we can write all the standard DAO methods once, for any entity type.
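The instanceClass field is needed because of type erasure: inside DynamoDBDao the type parameter T does not exist at runtime, while the mapper has to inspect the entity class (for example its @DynamoDBTable annotation) to know which table to use. The JDK-only sketch below shows the same idea with a hypothetical @TableName annotation and a hypothetical TableResolver class, standing in for the DynamoDB annotations and the mapper.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

// Hypothetical annotation playing the role of @DynamoDBTable.
@Retention(RetentionPolicy.RUNTIME) // must be kept at runtime to be readable via reflection
@Target(ElementType.TYPE)
@interface TableName {
    String value();
}

@TableName("Users")
class AnnotatedUser {
}

// Hypothetical generic component that, like DynamoDBDao, receives a Class<T>.
class TableResolver<T> {
    private final Class<T> instanceClass;

    TableResolver(Class<T> instanceClass) {
        this.instanceClass = instanceClass;
    }

    String tableName() {
        // `T.class` would not compile: the class token is the only runtime handle on T
        TableName annotation = instanceClass.getAnnotation(TableName.class);
        if (annotation == null) {
            throw new IllegalStateException(instanceClass.getName() + " has no @TableName");
        }
        return annotation.value();
    }
}
```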

The env field is used to find out whether the application is running in a test context: we never want to run deleteAll in production, but during testing it is useful for cleaning up the data.
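Stripped of Spring, the guard in deleteAll boils down to "do nothing unless the test profile is active". The sketch below reproduces just that check; ProfileGuardedCleaner is a hypothetical class, the active profiles are passed in as a plain String array (the type returned by Environment.getActiveProfiles()), and a List stands in for the DynamoDB table.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical class reproducing the guard of DynamoDBDao.deleteAll().
class ProfileGuardedCleaner {
    private final String[] activeProfiles; // as returned by Environment.getActiveProfiles()
    private final List<String> table;      // stands in for the DynamoDB table

    ProfileGuardedCleaner(String[] activeProfiles, List<String> table) {
        this.activeProfiles = activeProfiles;
        this.table = table;
    }

    /** Returns the number of deleted items: always 0 unless "test" is active. */
    int deleteAll() {
        if (!Arrays.asList(activeProfiles).contains("test")) {
            return 0; // silently does nothing, like the original method
        }
        int deleted = table.size();
        table.clear();
        return deleted;
    }
}
```

In Spring the same check can also be written as env.acceptsProfiles(Profiles.of("test")). If you prefer a loud failure over a silent no-op, the method could instead throw an exception outside the test profile.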

Now create the DAO dedicated to the User model:

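```java
import com.amazonaws.services.dynamodbv2.AmazonDynamoDB;
import lombok.extern.slf4j.Slf4j;
import org.springframework.core.env.Environment;
import org.springframework.stereotype.Repository;

@Repository
@Slf4j
public class UserDynamoDBDao extends DynamoDBDao<User, String> implements UserDao {

    public UserDynamoDBDao(AmazonDynamoDB amazonDynamoDB, Environment env) {
        // User.class tells the generic DAO which entity (and table) to map
        super(amazonDynamoDB, User.class, env);
    }
}
```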

It is an empty class that extends DynamoDBDao and implements UserDao. This way, our business logic can depend on the UserDao interface and receive this implementation by injection, without being tied to DynamoDB.
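To illustrate that decoupling, here is a sketch of a different implementation of the same GenericDao contract, backed by a LinkedHashMap, plus a service that only knows the interface. InMemoryDao, Person and PersonGreeter are hypothetical names that are not part of the project; the id is read from each entity through a Function passed to the constructor.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.function.Function;

// Same contract as the GenericDao interface of the article.
interface GenericDao<T, ID> {
    List<T> findAll();
    Optional<T> findById(ID id);
    T save(T entity);
    void delete(T entity);
    void deleteAll();
}

// Hypothetical entity used only in this sketch.
record Person(String id, String firstname) {}

// Hypothetical in-memory implementation: no DynamoDB, no Docker.
class InMemoryDao<T, ID> implements GenericDao<T, ID> {
    private final Map<ID, T> store = new LinkedHashMap<>();
    private final Function<T, ID> idOf; // extracts the key from an entity

    InMemoryDao(Function<T, ID> idOf) {
        this.idOf = idOf;
    }

    @Override
    public List<T> findAll() {
        return new ArrayList<>(store.values());
    }

    @Override
    public Optional<T> findById(ID id) {
        return Optional.ofNullable(store.get(id));
    }

    @Override
    public T save(T entity) {
        store.put(idOf.apply(entity), entity); // same key => overwrite
        return entity;
    }

    @Override
    public void delete(T entity) {
        store.remove(idOf.apply(entity));
    }

    @Override
    public void deleteAll() {
        store.clear();
    }
}

// Hypothetical service: it depends on the interface, not on the storage technology.
class PersonGreeter {
    private final GenericDao<Person, String> dao;

    PersonGreeter(GenericDao<Person, String> dao) {
        this.dao = dao;
    }

    String greet(String id) {
        return dao.findById(id)
                .map(p -> "Hello, " + p.firstname())
                .orElse("Unknown user");
    }
}
```

A fake like this can be handy for fast unit tests of the business logic, while the LocalStack-based integration tests keep verifying the real DynamoDB mapping.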

Fifth step: write the LocalStack configuration class

It is time to test the DAO with integration tests! First, inside the test package, create a LocalStack configuration class that all the test classes can import:

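```java
import static org.testcontainers.containers.localstack.LocalStackContainer.Service.DYNAMODB;

import com.amazonaws.services.dynamodbv2.AmazonDynamoDB;
import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBMapper;
import com.amazonaws.services.dynamodbv2.model.CreateTableRequest;
import com.amazonaws.services.dynamodbv2.model.ProvisionedThroughput;
import com.amazonaws.services.dynamodbv2.util.TableUtils;
import jakarta.annotation.PostConstruct; // javax.annotation.PostConstruct on Spring Boot 2.x
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.TestConfiguration;
import org.springframework.test.context.TestPropertySource;
import org.testcontainers.containers.Network;
import org.testcontainers.containers.localstack.LocalStackContainer;
import org.testcontainers.utility.DockerImageName;
// plus the import of the User class from your model package

@TestConfiguration
@TestPropertySource(properties = {
        "amazon.aws.accesskey=test1",
        "amazon.aws.secretkey=test231"
})
public class LocalStackConfiguration {

    @Autowired
    AmazonDynamoDB amazonDynamoDB;

    // static: created and started once, then shared by every test importing this class
    static LocalStackContainer localStack =
            new LocalStackContainer(DockerImageName.parse("localstack/localstack:1.0.4.1.nodejs18"))
                    .withServices(DYNAMODB)
                    .withNetworkAliases("localstack")
                    .withNetwork(Network.builder().createNetworkCmdModifier(cmd -> cmd.withName("test-net")).build());

    static {
        localStack.start();
        // The mapped host port is random, so the endpoint is published as a system property
        System.setProperty("amazon.dynamodb.endpoint", localStack.getEndpointOverride(DYNAMODB).toString());
    }

    @PostConstruct
    public void init() {
        DynamoDBMapper dynamoDBMapper = new DynamoDBMapper(amazonDynamoDB);
        // Build the CreateTable request from the annotations of the User class
        CreateTableRequest tableUserRequest = dynamoDBMapper.generateCreateTableRequest(User.class);
        tableUserRequest.setProvisionedThroughput(new ProvisionedThroughput(1L, 1L));
        // Does not fail if another Spring context already created the table in the shared container
        TableUtils.createTableIfNotExists(amazonDynamoDB, tableUserRequest);
    }
}
```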

Let us analyze the code:

- With @TestConfiguration we indicate to Spring that this is a configuration class only for the test scope.

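- With @TestPropertySource we want to add the indicated properties to the Spring test context, overriding those in application.properties. Be aware, though, that Spring's TestContext framework reads @TestPropertySource from the test class itself, so on an imported configuration class like this one it is not picked up; here this is harmless, because LocalStack accepts any credentials.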

- The variable of type LocalStackContainer represents the LocalStack container.

- With withServices we are telling LocalStack which AWS services we are interested in (in this case only DynamoDB).

- With withNetworkAliases we are giving a network alias to the container (we are not indicating the name of the container!).

- With withNetwork we are telling Testcontainers that the created container must be part of a custom network.

- Because this variable is static, the container is shared by all the methods of the test class.

- Moreover, since it lives in a @TestConfiguration class, the container is shared not only by the test methods of the same class but also by all the test classes that import this configuration class.

- In the static block we start the container and dynamically set the amazon.dynamodb.endpoint property, whose value (host and mapped port) changes with every new run of the container.

- Finally, after the static block has run, Spring invokes the method annotated with @PostConstruct, where we create the Users table in DynamoDB.
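Why is the container started only once? A static initializer runs a single time, when its class is first initialized, no matter how many test classes import the configuration. In addition, Spring Boot gives Java system properties a higher precedence than application.properties, which is why System.setProperty can override amazon.dynamodb.endpoint. The JDK-only sketch below reproduces the run-once part with a hypothetical SharedResource class and a made-up random port; it does not start any container.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in for the static container of LocalStackConfiguration.
class SharedResource {
    static final AtomicInteger STARTS = new AtomicInteger();

    static {
        // Runs exactly once, when the class is first initialized.
        STARTS.incrementAndGet();
        // Made-up random port, like the host port Testcontainers maps on every run.
        int mappedPort = 49152 + (int) (Math.random() * 1000);
        System.setProperty("amazon.dynamodb.endpoint", "http://127.0.0.1:" + mappedPort);
    }

    static String endpoint() {
        return System.getProperty("amazon.dynamodb.endpoint");
    }
}
```

However many times endpoint() is called, the initializer (and thus the "start") happens once and every caller sees the same endpoint; the value only changes on the next JVM run.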

Sixth step: write the test class for UserDao

Write the test class for UserDao:

```java
import static org.assertj.core.api.Assertions.assertThat;

import java.util.List;
import java.util.Optional;
import org.junit.jupiter.api.BeforeEach;
import org.junit.jupiter.api.Test;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.context.annotation.Import;
import org.springframework.test.context.ActiveProfiles;

@SpringBootTest
@Import(LocalStackConfiguration.class)
@ActiveProfiles("test")
class UserDaoTests {

    @Autowired
    private UserDao userDao;

    @BeforeEach
    void setUp() {
        // Every test starts from an empty Users table
        userDao.deleteAll();
    }

    @Test
    void saveNewElementTest() {
        var userOne = new User(null, "Vincenzo", "Racca");
        userDao.save(userOne); // the mapper fills in the generated id

        List<User> retrievedUsers = userDao.findAll();
        assertThat(retrievedUsers).hasSize(1);
        assertThat(retrievedUsers.get(0)).isEqualTo(userOne);
    }

    @Test
    void saveAnExistingElementTest() {
        var userOne = new User(null, "Vincenzo", "Racca");
        userDao.save(userOne);

        List<User> retrievedUsers = userDao.findAll();
        assertThat(retrievedUsers).hasSize(1);
        assertThat(retrievedUsers.get(0)).isEqualTo(userOne);
        assertThat(retrievedUsers.get(0).getFirstname()).isEqualTo("Vincenzo");

        // Same id, so the second save updates the existing item
        userOne.setFirstname("Enzo");
        userDao.save(userOne);

        retrievedUsers = userDao.findAll();
        assertThat(retrievedUsers).hasSize(1);
        assertThat(retrievedUsers.get(0)).isEqualTo(userOne);
        assertThat(retrievedUsers.get(0).getFirstname()).isEqualTo("Enzo");
    }

    @Test
    void deleteTest() {
        var userOne = new User(null, "Vincenzo", "Racca");
        userDao.save(userOne);
        String id = userOne.getId();
        assertThat(id).isNotNull();

        Optional<User> retrievedUser = userDao.findById(id);
        assertThat(retrievedUser).contains(userOne);

        userDao.delete(userOne);

        Optional<User> userNotRetrieved = userDao.findById(id);
        assertThat(userNotRetrieved).isEmpty();
    }
}
```

The relevant thing here is that we use the @Import annotation to import the LocalStack configuration class we wrote earlier.

We also activate the test profile with @ActiveProfiles("test"), so that deleteAll actually cleans the table before each test.

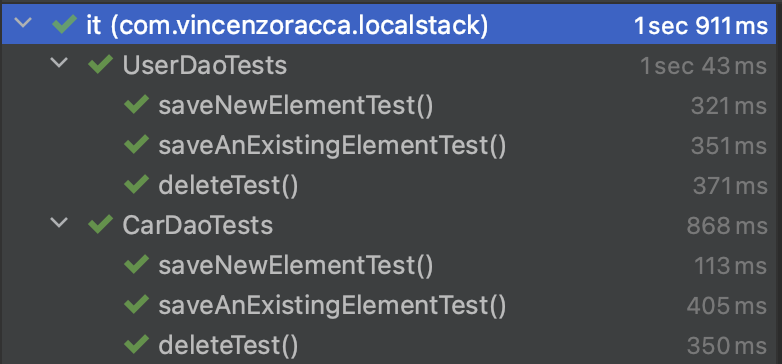

Now run all the tests from your IDE or, from the root of the project, with the command ./mvnw clean test (mvnw.cmd clean test on Windows).

All tests pass!

Conclusions

In this article you saw how to easily write "true" integration tests with Testcontainers and LocalStack, using a DynamoDB container during testing.

Find the full code on my GitHub repo: github.com/vincenzo-racca/testcontainers.
