By default, Docker containers can communicate with the outside world, but not vice versa.

To expose a container port to the outside world, you can map it with the -p flag when creating the container. For example, with the command: docker run --name nginx -d -p 8080:80 nginx

you are creating an nginx container, and you are mapping port 8080 of the Docker host to port 80 of the container.
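As a minimal sketch of the mapping described above, the two helper functions below (their names are only illustrative) start the container and then request it from the Docker host. They assume no container named nginx exists yet, host port 8080 is free, and curl is installed on the host.

```shell
# Start nginx with a published port.
# -p HOST_PORT:CONTAINER_PORT -> host port 8080 is forwarded to port 80 in the container.
run_published_nginx() {
  docker run --name nginx -d -p 8080:80 nginx
}

# Request the published port from the Docker host.
# -s hides the progress meter, -I asks only for the response headers.
check_published_nginx() {
  curl -sI http://localhost:8080
}
```

Calling run_published_nginx followed by check_published_nginx should return the HTTP response headers from nginx if the mapping is in place.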

But how does communication between containers work?

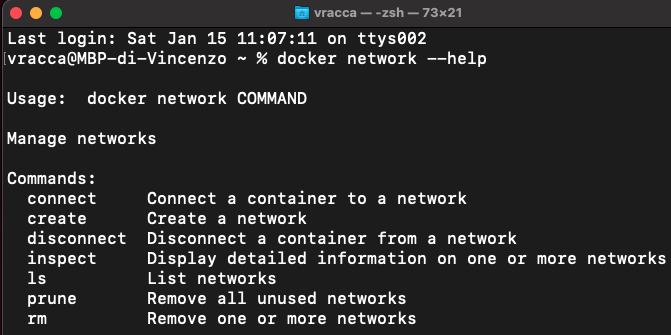

The network command and Docker's default networks

The docker network command allows you to manage networks in Docker. Running docker network --help lists the subcommands it provides:

The commands available are few and clear.

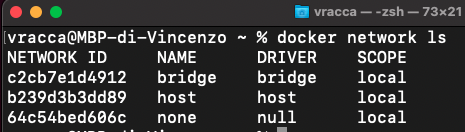

Try displaying the list of networks with the command docker network ls

By default, the list contains three networks:

- the bridge network, referred to here as docker0 after the interface it uses on the Docker host, is the network that containers join by default. Containers on it are on the same network and can therefore communicate with each other. It also isolates them from the outside world and from all containers that are not part of this network.

- the host network is not a real network; a container that is part of it connects directly to the network of the Docker host (on Docker Desktop this functionality is limited or unavailable, depending on the version).

- the none network is also not a real network; it does not connect the container to any network.

If you create two NGINX containers, for example by executing the commands:

docker run --name nginx1 -d nginx

docker run --name nginx2 -d nginx

you can see, with the commands docker inspect nginx1 and docker inspect nginx2, that they are part of the same network.
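The full docker inspect output is long, so a format template can narrow it down to the networking fields. The helpers below are a small sketch (the function names are illustrative, not part of Docker) that print the networks a container is attached to and its IP address on each of them.

```shell
# Print the names of the networks a container is attached to.
container_networks() {
  docker inspect -f '{{range $name, $cfg := .NetworkSettings.Networks}}{{$name}} {{end}}' "$1"
}

# Print the IP address the container has on each attached network.
container_ip() {
  docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}} {{end}}' "$1"
}
```

For example, container_networks nginx1 and container_networks nginx2 should report the same network, while container_ip nginx1 gives the address to use when testing connectivity by IP.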

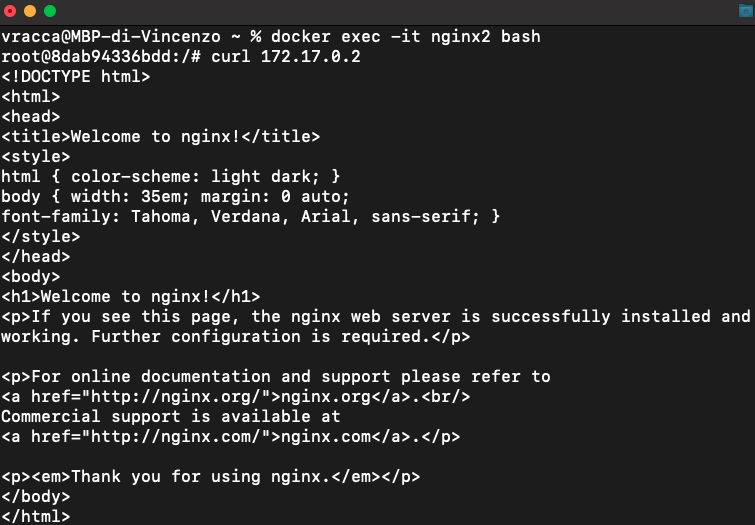

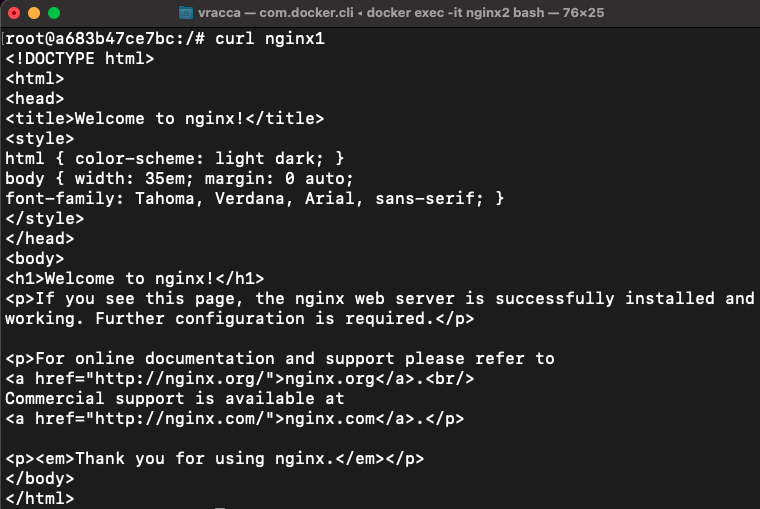

In fact, if you enter the nginx2 container with the command docker exec -it nginx2 bash, you can successfully run curl against the IP address of the nginx1 container (curl <nginx1_ip>).

Even though the two containers are part of the same network and can therefore "ping" each other, this does not solve a common use case: for example, a web application container that needs to communicate with a database container.

Since containers are ephemeral, and a container can change IP address when it is restarted, the web application cannot reliably reach the database via the database's IP address, as it is not fixed.

In the following section, you will see how to solve this problem.

Linking between containers: the --link flag (deprecated)

In Persistence in Docker containers: volumes and bind mounts, you created two containers to see how volumes work: a postgres container and a pgAdmin container.

The postgres container was created with the following command: docker run --name postgres -e POSTGRES_USER=user -e POSTGRES_PASSWORD=password -e POSTGRES_DB=mydb -p 5432:5432 -d postgres:13.5

The -p flag allows you to map a Docker Host port to a container port. Why have we used this?

So that the pgAdmin container can communicate with the database.

The pgAdmin container was created with the following command: docker run --name=pgadmin -e PGADMIN_DEFAULT_PASSWORD=user -e PGADMIN_DEFAULT_EMAIL=admin@admin.com -p 5050:80 -d dpage/pgadmin4

Here again you create a port mapping: you map port 5050 of your host to port 80 of the container.

By doing so, you can access pgAdmin from localhost:5050.

In practice you access the postgres database only through pgAdmin, so ideally you should drop the -p 5432:5432 mapping and enter the hostname of the postgres container directly in pgAdmin. Removing that mapping also avoids exposing the postgres database to the outside world, which adds an extra degree of security. Let's see how!

A legacy feature of Docker lets you link containers via the --link flag:

--link <name or id>:alias

where <name or id> identifies a container and alias is an alias for the link name, or:

--link <name or id>

in which case the alias is the same as the container name.

When you create a container with the docker run command, this flag links it to a specific container.

But what does "link" mean? Let's see it with an example!

Destroy and recreate the nginx2 container:

docker stop nginx2 && docker rm nginx2 && docker run --name nginx2 -d --link nginx1 nginx

Now enter the nginx2 container again and run curl nginx1.

This time the container can perform a cURL using the name of the linked container directly, i.e. nginx1.

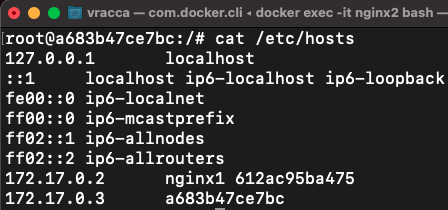

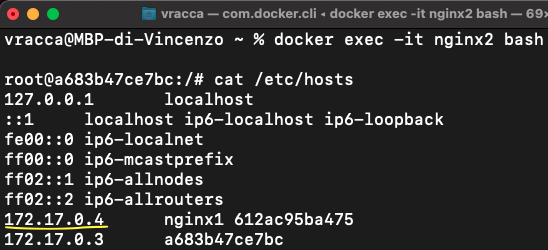

And that's exactly what the --link flag does. If you run cat on the /etc/hosts file of the nginx2 container, you can see that Docker has inserted the ip_nginx1->nginx1 mapping:

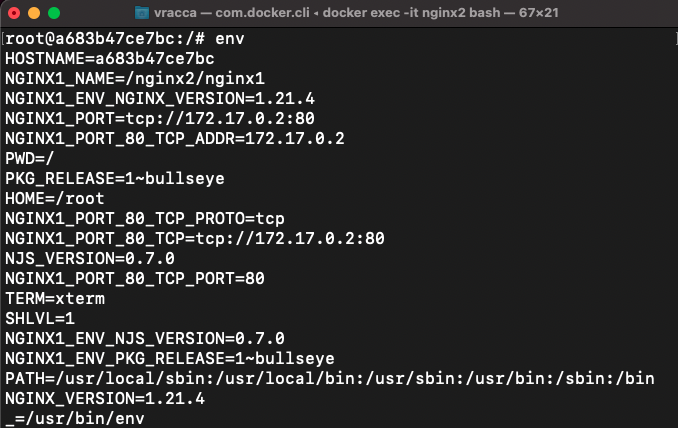

In addition, several environment variables indicating various information about the nginx1 container have also been added:

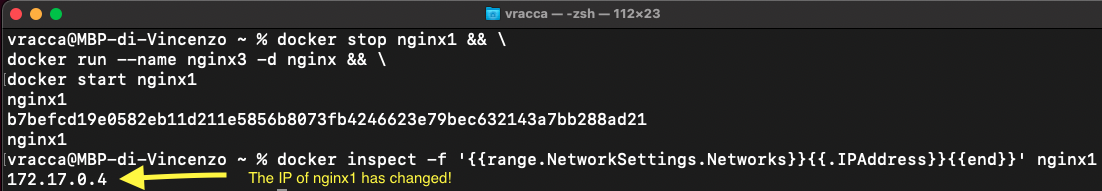

Note that the IP of the nginx1 container is 172.17.0.2. But what would happen if I shut down the nginx1 container, created an nginx3 container and then started the nginx1 container again? Let's see!

The nginx1 container has changed IP! Will this be a problem now for the nginx2 container?

No problem at all! Docker has also updated the IP of nginx1 in the hosts file of nginx2. Otherwise there would be no advantage in using this flag.
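The experiment can be reproduced with a few commands. This is only a sketch: it assumes nginx1 is running, nginx2 was created with --link nginx1 as above, and no container named nginx3 exists yet. The function name is illustrative.

```shell
# Restart experiment for a linked container.
relink_experiment() {
  docker stop nginx1
  # A container started now may take over the address nginx1 just released
  docker run --name nginx3 -d nginx
  docker start nginx1
  # Show the hosts file that Docker maintains inside the linking container
  docker exec nginx2 cat /etc/hosts
}
```

After running relink_experiment, the nginx1 entry printed from the hosts file of nginx2 can be compared with the address that docker inspect nginx1 now reports.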

In the same way, you can connect the postgres container with the pgAdmin container without needing to expose the database to the outside world.

With this flag, however, environment variables are also shared between containers, which is not a good thing.

Moreover, the flag has been deprecated in favour of custom networks, so its use should be avoided.

Creation of custom networks

You can create custom networks with the command docker network create <network_name>.

Two containers that are part of the same custom network will be able to communicate with each other and will be isolated from other Docker networks, including the default docker0 network.

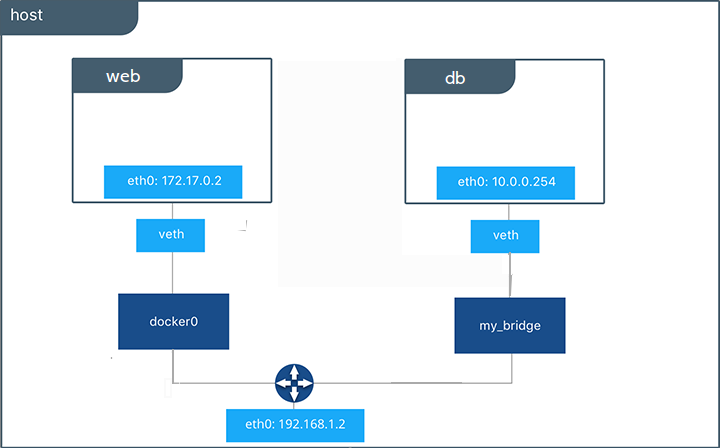

The figure above, taken from the official Docker documentation, shows that the docker0 network has a different network address from the custom my_bridge network.

Some differences between the default bridge network, docker0, and a custom bridge network:

- Containers that are part of the docker0 network can only access other containers on the same network via an IP address (unless you use --link). A container in a custom network can communicate with other containers in the same custom network via their names or aliases.

- If you do not specify the --network flag when creating containers, they will be part of the docker0 network, so by default containers are not isolated from each other. By specifying a custom network, only containers in the same custom network can communicate with each other.

Create a network called nginx_net:

docker network create nginx_net

Perform an inspect on it:

docker network inspect nginx_net

    [
      {
        "Name": "nginx_net",
        "Id": "0165d657ff0af159642158c5adf0c4f171eeae00376dd69fcae2b22e4ec4ffb4",
        "Created": "2022-01-15T14:52:11.414646094Z",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
          "Driver": "default",
          "Options": {},
          "Config": [
            {
              "Subnet": "172.18.0.0/16",
              "Gateway": "172.18.0.1"
            }
          ]
        },
        ...

The Subnet field shows that the network is 172.18.0.0/16, which is different from that of docker0, which in my case is 172.17.0.0.
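To compare the two subnets without reading the whole JSON, a format template can extract just that field. The helper below is a sketch (the function name is illustrative); it assumes the network passed as argument exists.

```shell
# Print the subnet(s) configured for a Docker network.
network_subnet() {
  docker network inspect -f '{{range .IPAM.Config}}{{.Subnet}} {{end}}' "$1"
}
```

Running network_subnet bridge and network_subnet nginx_net side by side should show two different address ranges, one for the default bridge network and one for the custom network.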

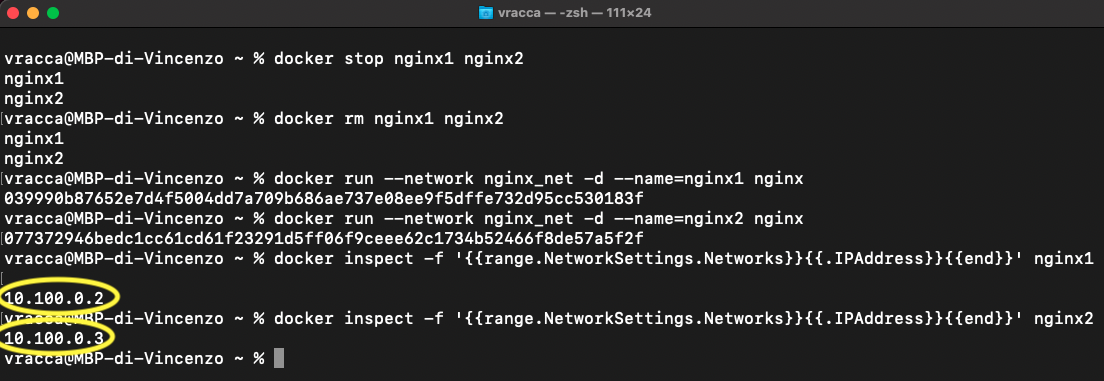

If you wish, you can also set the subnet during network creation:

docker network rm nginx_net

docker network create --subnet 10.100.0.0/16 nginx_net

Now create the nginx1 and nginx2 containers again, this time using nginx_net for both, with the flag --network <network_name>.

In this way, the containers are on the same network and are also isolated from other containers that are not part of it.

If you try again to run the command curl nginx1 from the nginx2 container, you can verify that it works without problems.
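Put together, the steps above might look like the following sketch. It assumes the nginx_net network already exists and that the earlier nginx1 and nginx2 containers can be thrown away; the function name is illustrative.

```shell
# Recreate both containers on the custom network and test name resolution.
nginx_on_custom_network() {
  # Remove the earlier containers so the names can be reused (-f also stops them)
  docker rm -f nginx1 nginx2
  docker run --name nginx1 -d --network nginx_net nginx
  docker run --name nginx2 -d --network nginx_net nginx
  # On user-defined networks, Docker's embedded DNS resolves container names
  docker exec nginx2 curl -s http://nginx1
}
```

If the final curl is issued before nginx1 has finished starting, it may fail once; repeating it a moment later should return the default nginx page.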

So, the right way to make the postgres container communicate with the pgAdmin container is:

- create a custom network

- create the postgres container using the custom network

- create the pgAdmin container using the custom network.

At that point, on the pgAdmin screen where you configure the connection to the database, you can enter the name of the postgres container as the hostname.

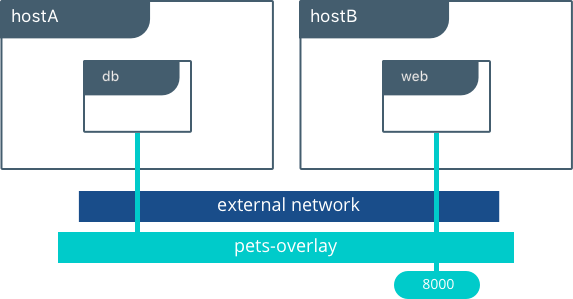
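The three steps listed above can be sketched as follows, reusing the docker run commands from earlier in the article. The network name pg_net and the function name are assumptions made for illustration, and the sketch assumes the earlier postgres and pgadmin containers have been removed.

```shell
# Run postgres and pgAdmin on a shared custom network.
pg_stack() {
  # 1. Create the custom network (pg_net is a hypothetical name)
  docker network create pg_net

  # 2. Start postgres on it; no -p flag, so the database is not published on the host
  docker run --name postgres --network pg_net \
    -e POSTGRES_USER=user -e POSTGRES_PASSWORD=password -e POSTGRES_DB=mydb \
    -d postgres:13.5

  # 3. Start pgAdmin on the same network; only its web UI is published
  docker run --name pgadmin --network pg_net \
    -e PGADMIN_DEFAULT_PASSWORD=user -e PGADMIN_DEFAULT_EMAIL=admin@admin.com \
    -p 5050:80 -d dpage/pgadmin4
}
```

With this setup pgAdmin is still reachable at localhost:5050, and the hostname to enter in its connection form is simply postgres.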

A brief look at advanced Docker networking

When you displayed the list of available networks, in addition to the NAME column, there was also a column called DRIVER.

Docker's networking is built on drivers. As you've already seen, Docker creates three networks by default, named bridge, host and none; they use the bridge, host and null drivers respectively.

The bridge driver allows you to create virtual private networks within the same Docker daemon.

Custom networks created with the docker network create command use the bridge driver by default.
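As a small sketch of how drivers show up on the command line, the helpers below list each network with its driver and create a network with the driver named explicitly. The function names are illustrative; the second one should be equivalent to a plain docker network create, since bridge is the default driver.

```shell
# List every network together with its driver and scope.
list_network_drivers() {
  docker network ls --format '{{.Name}}: {{.Driver}} ({{.Scope}})'
}

# Create a custom network, spelling out the default driver.
create_bridge_network() {
  docker network create --driver bridge "$1"
}
```

For example, create_bridge_network demo_net followed by list_network_drivers should show demo_net (a hypothetical name) alongside the three default networks.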

However, containers from different Docker daemons can communicate with each other using the overlay driver.

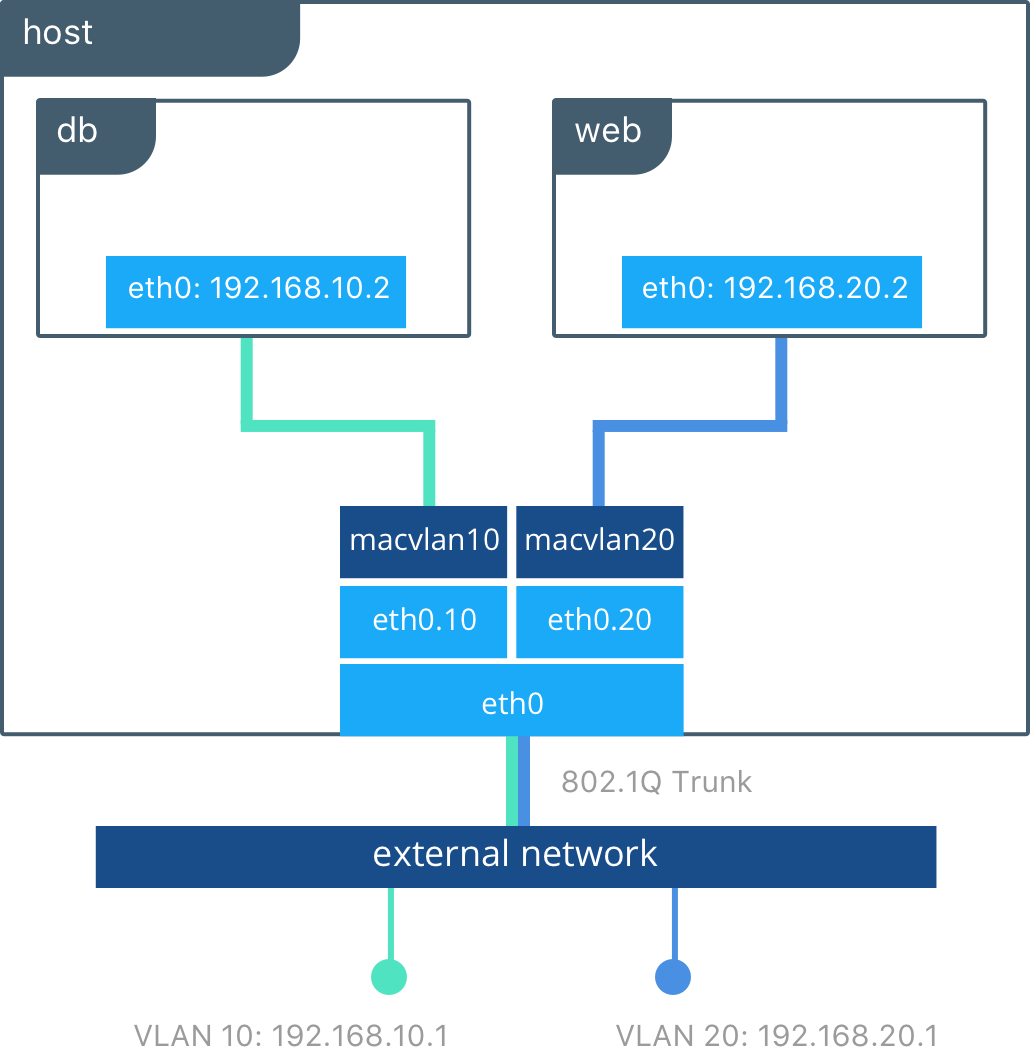

It is also possible to create networks by directly managing container MAC addresses via the macvlan driver.

If you want to learn more about these two drivers, these are the links to the official docs:

https://docs.docker.com/network/overlay/ and https://docs.docker.com/network/macvlan/

Conclusions

In this article you have seen how networking works in Docker and how to make two (or more) containers communicate.

You have seen the use of the --link flag, which is deprecated and should be avoided. You have learned how to create a custom network and how to connect containers to it with the --network flag. This is the recommended approach.

Official docs on --link: https://docs.docker.com/network/links/.

Official docs on networking in Docker: https://docs.docker.com/network/.
